Driving Software Predictability Through Platform-enabled Quality Engineering

Mobin Thomas, Quality Engineering Team UST

Software Engineering Requires A Process Revolution.

In recent years, the need for a refined and lean Quality Engineering (QE) strategy has evolved with the advent of DevSecOps. With organizations focusing on a streamlined and early quality strategy, can the traditional levers of automation and analytics help achieve predictable software quality?

If we look at the evolution of quality control in the manufacturing industry, leading manufacturers adopted defect prediction models and Six Sigma to identify defect sources, then followed up with investments in robotics and automation to streamline the process through smart and brilliant factories. This also created the need for real-time data from sensors and decentralized decision-making through intelligence built on edge devices. The focus is now moving upstream, with investments to prevent supply chain disruptions, predict machine failures, and build preventive maintenance capabilities.

We believe software engineering is on a similar trajectory. Most teams can perform automated tests and have empirical data available across systems such as test management tools, application logs, and process-capability baselines. However, this information must be consolidated, synthesized, and correlated to convert data into intelligence. This is where the need for a Quality Engineering platform comes into play.

Future QE blueprints should put BOTs and intelligence at the center of the vision. The ‘brilliant software factories’ powered by ‘scrum teams’ should be able to leverage autonomous, intelligent BOTs as the first line of defect detection. These BOTs should also assist engineers with higher-skilled tasks such as automation, application performance tuning, and predictive performance modeling to prevent failures, so that teams can focus on the end-user experience through usability and user experience assurance.

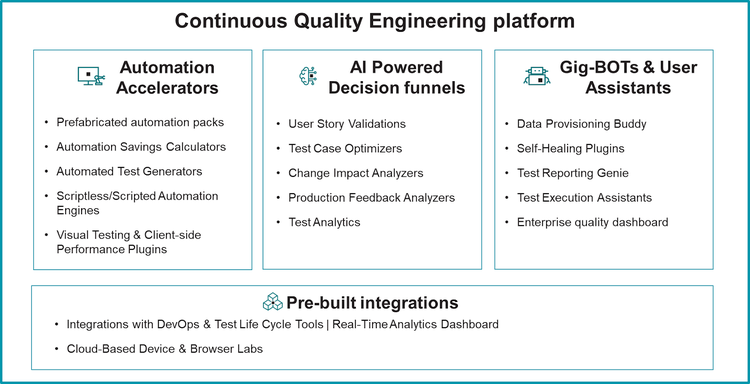

Core to this blueprint should be a modular platform with a plug-and-play architecture and pre-built integrations with leading industry tools that helps organizations realize this vision. A Continuous QE platform requires four facets for success:

Automation Accelerators: The goal of these components should be to encourage standardization and reuse across the automation landscape. Building prefabricated test assets for applications, either in-house or in partnership with tool vendors, can help jumpstart automation initiatives. Additional accelerators that help build end-to-end tests and improve test diversity and coverage can amplify the investments made in automation. This will help enterprises achieve the base maturity required to adopt the other components of the platform.

AI-Powered Decision Funnels: Leveraging AI to enable intelligent quality engineering practices is the next challenge enterprises should solve. The base use cases that can help move towards autonomous QE are AI/ML-based prediction with reinforcement learning and feedback loops and end-user usage pattern prediction, followed by prescriptive capabilities such as optimization, prioritization, and change impact analysis.

Gig-BOT & User Assistants: As enterprises build automation capabilities outside of QE, they can likely leverage those investments in QE as well. Identifying specific gigs that have been automated across the enterprise and leveraging them as part of test execution is a great way to improve coverage and ROI. The second set of BOTs should focus on eliminating manual effort in activities such as data mining, data creation, test reporting, and report consolidation.

Pre-Built Integrations: Given the diversity of tools across an enterprise, it is a good idea to build integrations across related tools and a common data repository that can power the AI decision funnels and dashboards. The integrations can also help orchestrate tests more effectively and efficiently.
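To make the idea of a common data repository more concrete, the following is a minimal sketch, not a description of any specific product. It assumes two hypothetical tool exports (a test management tool and a CI/CD pipeline) with made-up field names, and normalizes both into one illustrative record shape, `QualityRecord`, that a dashboard or decision funnel could then query.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QualityRecord:
    """Common record shape for the shared repository (hypothetical schema)."""
    test_id: str
    source: str
    passed: bool
    duration_s: float


def from_test_management(row: dict) -> QualityRecord:
    # Assumed export format: {"id": str, "status": "PASS" | "FAIL", "duration_ms": int}
    return QualityRecord(
        test_id=row["id"],
        source="test_management",
        passed=row["status"].upper() == "PASS",
        duration_s=row["duration_ms"] / 1000.0,
    )


def from_ci_pipeline(row: dict) -> QualityRecord:
    # Assumed export format: {"name": str, "ok": bool, "seconds": float}
    return QualityRecord(
        test_id=row["name"],
        source="ci_pipeline",
        passed=bool(row["ok"]),
        duration_s=float(row["seconds"]),
    )


def pass_rate_by_test(records: list[QualityRecord]) -> dict[str, float]:
    """Aggregate the pass rate per test across all integrated sources."""
    totals: dict[str, list[int]] = {}
    for record in records:
        bucket = totals.setdefault(record.test_id, [0, 0])  # [passed, total]
        bucket[0] += int(record.passed)
        bucket[1] += 1
    return {test_id: passed / total for test_id, (passed, total) in totals.items()}
```

Each new tool integration then only needs its own small adapter function, while everything downstream works against the single shared record type.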

With a platform-based approach, organizations can accelerate the adoption of a QE culture and significantly improve time to market.

This approach will also amplify the impact of existing automation investments and enable developers to self-certify code to a greater degree and move towards autonomous QE in the future.

Critical success factors: While the idea of autonomous BOT-enabled QE is enticing, this blueprint also requires a highly skilled team to facilitate the transition. It is critical to design a structured skill enablement roadmap that prepares associates for next-gen QE implementations. This requires building a certification plan to nurture full-stack QE talent with varied degrees of experience across SDETs, full-stack Test Engineers, and full-stack Architects. A good blend of talent across these roles is recommended for this strategy to work. This training can be extended to developers as organizations drive towards making QE everyone’s responsibility.

The second aspect is the availability of reliable data across sources, which the algorithms must use in decision-making. For example, risk- and impact-based test recommenders rely on the accuracy of test execution data and the appropriate classification of production incidents. We see the need for quality scientists who can help develop strategies to harvest information from the ecosystem of APM tools, application logs, test management tools, execution logs, and CI/CD pipeline data to enable the autonomous QE vision for the enterprise.
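As a rough illustration of why data accuracy matters for a risk- and impact-based test recommender, here is a minimal sketch. The scoring formula, the weights, and the `ExecutionHistory` fields are assumptions made for this example only; a real recommender would likely learn its weights from data rather than hard-code them.

```python
from dataclasses import dataclass


@dataclass
class ExecutionHistory:
    """Per-test inputs harvested from execution and incident data (hypothetical)."""
    name: str
    runs: int
    failures: int
    linked_incidents: int      # production incidents classified against this area
    covers_changed_code: bool  # result of a change impact analysis


def risk_score(t: ExecutionHistory,
               incident_weight: float = 0.5,
               change_boost: float = 2.0) -> float:
    # Tests with no execution history are treated as fully risky (assumption).
    failure_rate = t.failures / t.runs if t.runs else 1.0
    score = failure_rate + incident_weight * t.linked_incidents
    # Tests touching changed code are boosted so they surface first.
    return score * change_boost if t.covers_changed_code else score


def recommend(tests: list[ExecutionHistory], top_n: int) -> list[str]:
    """Return the names of the top_n highest-risk tests."""
    ranked = sorted(tests, key=risk_score, reverse=True)
    return [t.name for t in ranked[:top_n]]
```

If failures are logged against the wrong test or incidents are misclassified, `failures` and `linked_incidents` are wrong and the ranking degrades with them, which is the dependency the paragraph above describes.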

Looking beyond: shifting focus from application to user experience

From a non-functional testing standpoint, the initial MVPs of the platform should focus on features such as automated discovery of security vulnerabilities through static code analysis and accessibility compliance checks, along with client-side performance simulation and analytics. The functional test scripts should also possess self-healing capabilities to adapt to incremental changes to applications. In the short term, organizations may adopt these QE best practices and get closer to the autonomous testing vision, but non-functional quality gates remain a capability that needs further research and development.
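One simple way to picture self-healing test scripts is a locator that falls back to other known attributes when its primary identifier no longer matches. The sketch below is only an illustration under simplifying assumptions: the page is modeled as a plain list of attribute dictionaries rather than a real browser DOM, and the function name, locator shape, and `min_matches` threshold are all hypothetical.

```python
def find_element(page: list[dict], locator: dict, min_matches: int = 2) -> dict:
    """Find an element by id, healing the locator if the id has changed."""
    # Primary strategy: exact id match.
    for element in page:
        if element.get("id") == locator["id"]:
            return element

    # Fallback: pick the element sharing the most previously recorded attributes.
    def overlap(element: dict) -> int:
        return sum(1 for key, value in locator["attrs"].items()
                   if element.get(key) == value)

    best = max(page, key=overlap, default=None)
    if best is None or overlap(best) < min_matches:
        raise LookupError(f"no confident match for locator {locator['id']!r}")

    # Heal: remember the new id so later runs use the primary strategy again.
    locator["id"] = best.get("id")
    return best
```

For example, if a button's id changes from `btn-submit` to `btn-submit-v2` but its tag, text, and class stay the same, the fallback still finds it and updates the stored id. The `min_matches` threshold is there so that an unrelated element is reported as a failure rather than silently accepted.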

As organizations continue to make quality everyone’s responsibility and push for more sprint-level defect containment, we are preparing for a future with self-learning and self-managing QE platforms powered by advanced AI and analytics.

Talk to our experts to understand how UST’s NoSkript can help your organization assess its current landscape and co-create a roadmap for a customer-first and quality-first journey.